For robotics applications, one of the leading sets of tools is the Robot Operating System (ROS). The version of ROS that runs on the Jetson AGX Xavier is ROS Melodic. Installing ROS on the Jetson Xavier is simple. Looky here:

Background

ROS was originally developed at Stanford University as a platform to integrate methods drawn from all areas of artificial intelligence, including machine learning, vision, navigation, planning, reasoning, and speech/natural language processing.

From 2008 until 2013, development on ROS was performed primarily at the robotics research company Willow Garage, which open sourced the code. During that time, researchers at over 20 different institutions collaborated with Willow Garage and contributed to the code base. In 2013, ROS stewardship transitioned to the Open Source Robotics Foundation.

From the ROS website:

The Robot Operating System (ROS) is a flexible framework for writing robot software. It is a collection of tools, libraries, and conventions that aim to simplify the task of creating complex and robust robot behavior across a wide variety of robotic platforms.

Why? Because creating truly robust, general-purpose robot software is hard. From the robot’s perspective, problems that seem trivial to humans often vary wildly between instances of tasks and environments. Dealing with these variations is so hard that no single individual, laboratory, or institution can hope to do it on their own.

Core Components

At the lowest level, ROS offers a message passing interface that provides inter-process communication. Like most message passing systems, ROS has a publish/subscribe mechanism along with request/response procedure calls. An important thing to remember about ROS, and one of the reasons that it is so powerful, is that you can run the system on a heterogeneous group of computers. This allows you to distribute tasks across different systems easily.

For example, you may want the Jetson to run as the main node and direct other processors that act as control subsystems. A concrete example is to have the Jetson perform a high-level task like path planning while instructing microcontrollers to perform lower-level tasks, like controlling motors, to drive the robot to a goal.
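
The publish/subscribe and request/response mechanisms described above can be tried from the command line with the standard rostopic and rosservice tools. The following is a minimal sketch, not part of the JetsonHacks scripts. The topic name /chatter and the message text are arbitrary choices for illustration, and the run wrapper is a hypothetical helper that only prints each command unless DRY_RUN=0 is set, since the real commands need a running ROS master (roscore).

```shell
# Print each command instead of running it unless DRY_RUN=0 is set,
# so the sketch can be read without a ROS master running.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Publish/subscribe: publish a std_msgs/String on /chatter once per second.
# /chatter is an arbitrary topic name chosen for this example.
publish_demo() {
  run rostopic pub -r 1 /chatter std_msgs/String "data: 'hello from the Jetson'"
}

# Subscribe from another terminal, or from another machine using the same master.
subscribe_demo() {
  run rostopic echo /chatter
}

# Request/response: call a service that the rosout node normally provides.
service_demo() {
  run rosservice call /rosout/get_loggers
}

publish_demo
subscribe_demo
service_demo
```

With DRY_RUN=0, publish_demo and subscribe_demo each keep running until interrupted, so they would normally be started in separate terminals.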

At a higher level, ROS provides facilities and tools for a Robot Description Language, diagnostics, pose estimation, localization, navigation and visualization.

You can read more about the Core Components here.

Installation

There is a repository on the JetsonHacks account on GitHub named installROSXavier.

The main script, installROS.sh, is a straightforward implementation of the install instructions taken from the ROS Wiki. The instructions install ROS Melodic on the Jetson Xavier.

You can clone the repository on the Jetson:

$ git clone https://github.com/jetsonhacks/installROSXavier.git

$ cd installROSXavier

installROS.sh

The install script installROS.sh will install the prerequisites and ROS packages specified. Usage:

Usage: ./installROS.sh [[-p package] | [-h]]

-p | --package <packagename> ROS package to install

Multiple Usage allowed

The first package should be a base package. One of the following:

ros-melodic-ros-base

ros-melodic-desktop

ros-melodic-desktop-full

Default is ros-melodic-ros-base if no packages are specified.

Example Usage:

$ ./installROS.sh -p ros-melodic-desktop -p ros-melodic-rgbd-launch
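
To make the "multiple usage" behavior of -p concrete, here is a minimal sketch of how repeated -p options might be collected, with the documented default applied when none are given. This is a hypothetical re-creation for illustration, not the code in installROS.sh. The function name collect_packages is made up.

```shell
# Hypothetical re-creation of the option handling; not the script's actual code.
collect_packages() {
  packages=""
  while [ "$#" -gt 0 ]; do
    case "$1" in
      -p|--package)
        if [ "$#" -lt 2 ]; then
          echo "Missing package name after $1" >&2
          return 1
        fi
        # Append the package name, space separated
        packages="${packages:+$packages }$2"
        shift
        ;;
      -h|--help)
        echo "Usage: ./installROS.sh [[-p package] | [-h]]"
        return 0
        ;;
      *)
        echo "Unknown option: $1" >&2
        return 1
        ;;
    esac
    shift
  done
  # Fall back to the documented default when no -p was given
  echo "${packages:-ros-melodic-ros-base}"
}

collect_packages -p ros-melodic-desktop -p ros-melodic-rgbd-launch
collect_packages
```

The first call prints both package names on one line. The second prints ros-melodic-ros-base.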

This script installs a baseline ROS environment. There are several tasks:

- Enables the universe, multiverse, and restricted repositories

- Adds the ROS sources list

- Sets the needed keys

- Installs the specified ROS packages (defaults to ros-melodic-ros-base if none specified)

- Initializes rosdep

You can edit this file to add the ROS packages for your application.
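
After the script finishes, one quick sanity check is to source the ROS environment and confirm the distribution name. The sketch below is not part of the repository. It assumes the standard install prefix /opt/ros/melodic, and check_ros_install is a hypothetical helper name.

```shell
# Report whether a ROS distribution is installed under /opt/ros.
# The distro name defaults to melodic; /opt/ros is the standard install prefix.
check_ros_install() {
  distro="${1:-melodic}"
  setup_file="/opt/ros/$distro/setup.sh"
  if [ -f "$setup_file" ]; then
    # setup.sh is the POSIX-shell variant of setup.bash
    . "$setup_file"
    echo "ROS $ROS_DISTRO found at /opt/ros/$distro"
  else
    echo "ROS $distro not found at /opt/ros/$distro"
    return 1
  fi
}

check_ros_install melodic || echo "Run ./installROS.sh first"
```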

setupCatkinWorkspace.sh

setupCatkinWorkspace.sh builds a Catkin Workspace.

Usage:

$ ./setupCatkinWorkspace.sh [optionalWorkspaceName]

where optionalWorkspaceName is the name and path of the workspace to be used. The default workspace name is catkin_ws. If a path is not specified, the default path is the current home directory. This script also sets up some ROS environment variables.
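
The workspace naming rule above can be sketched as follows. This is an illustration under assumptions, not the script's actual code: it assumes a bare name is placed under the home directory and an absolute path is used as given. The function names workspace_path and make_workspace are hypothetical.

```shell
# Resolve the workspace directory: default name catkin_ws, placed under $HOME
# unless an absolute path is given (assumed behavior, see the text above).
workspace_path() {
  name="${1:-catkin_ws}"
  case "$name" in
    /*) echo "$name" ;;
    *)  echo "$HOME/$name" ;;
  esac
}

# Create the directory layout and build it if catkin_make is on the PATH.
make_workspace() {
  ws="$(workspace_path "$1")"
  mkdir -p "$ws/src" || return 1
  if command -v catkin_make >/dev/null 2>&1; then
    (cd "$ws" && catkin_make)
  else
    echo "catkin_make not found; source /opt/ros/melodic/setup.bash first" >&2
  fi
}

workspace_path            # default: catkin_ws under the home directory
workspace_path robot_ws   # a custom name, still under the home directory
```

Calling make_workspace robot_ws would then create the src directory and run catkin_make in the workspace root.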

The script sets placeholders for some ROS environment variables in the file .bashrc, which is located in the home directory. The names of the variables are (they should be towards the bottom of the .bashrc file):

- ROS_MASTER_URI

- ROS_IP

The script sets ROS_MASTER_URI to the local host and lists the network interfaces after the ROS_IP entry. You will need to configure these variables for your robot's network configuration. Refer to the script for further details.
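
As an illustration of what configuring these variables might look like on a second computer that talks to a ROS master running on the Jetson, here is a minimal sketch. The IP addresses are made up for the example, and ros_master_uri is a hypothetical helper. Port 11311 is the default port of the ROS master.

```shell
# Hypothetical helper: build a master URI from a host and an optional port.
# 11311 is the default port used by the ROS master (roscore).
ros_master_uri() {
  printf 'http://%s:%s\n' "$1" "${2:-11311}"
}

# Example addresses, assumed for illustration only.
JETSON_IP="192.168.1.10"        # machine running roscore
THIS_MACHINE_IP="192.168.1.20"  # machine these lines run on

export ROS_MASTER_URI="$(ros_master_uri "$JETSON_IP")"
export ROS_IP="$THIS_MACHINE_IP"

echo "ROS_MASTER_URI=$ROS_MASTER_URI"
echo "ROS_IP=$ROS_IP"
```

On the Jetson itself, ROS_IP would instead be the Jetson's own address on the network the robot uses.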

Notes

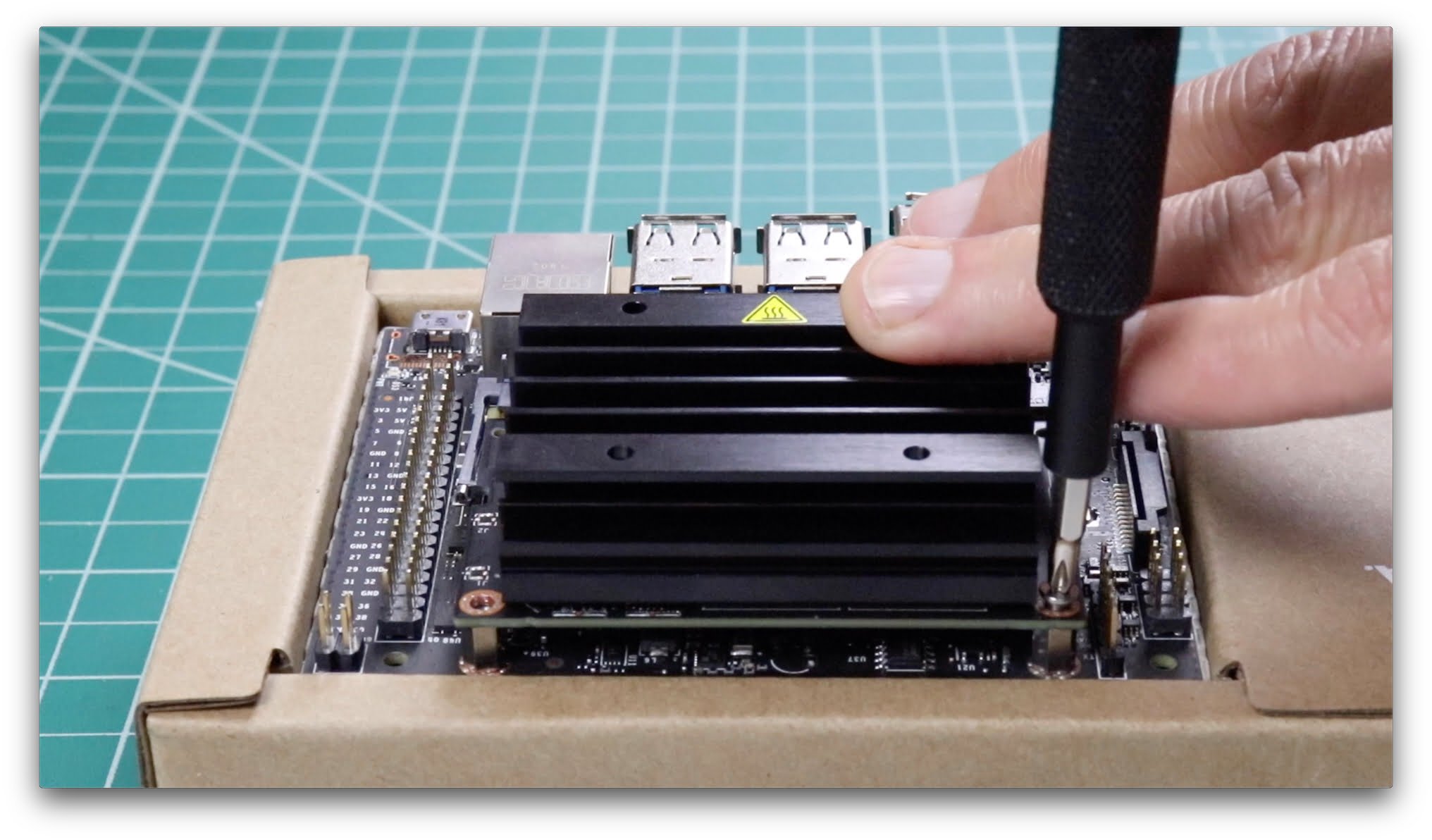

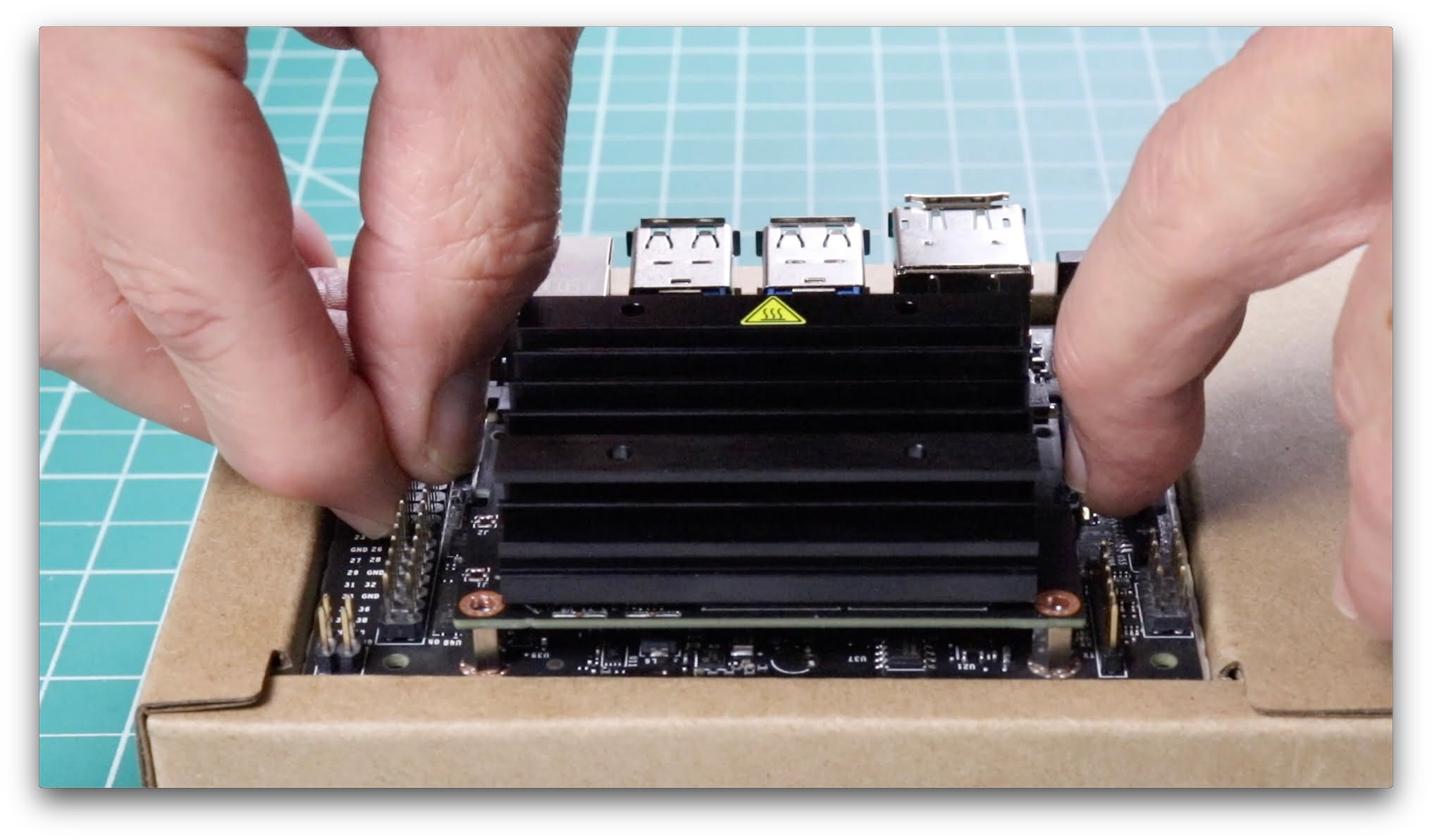

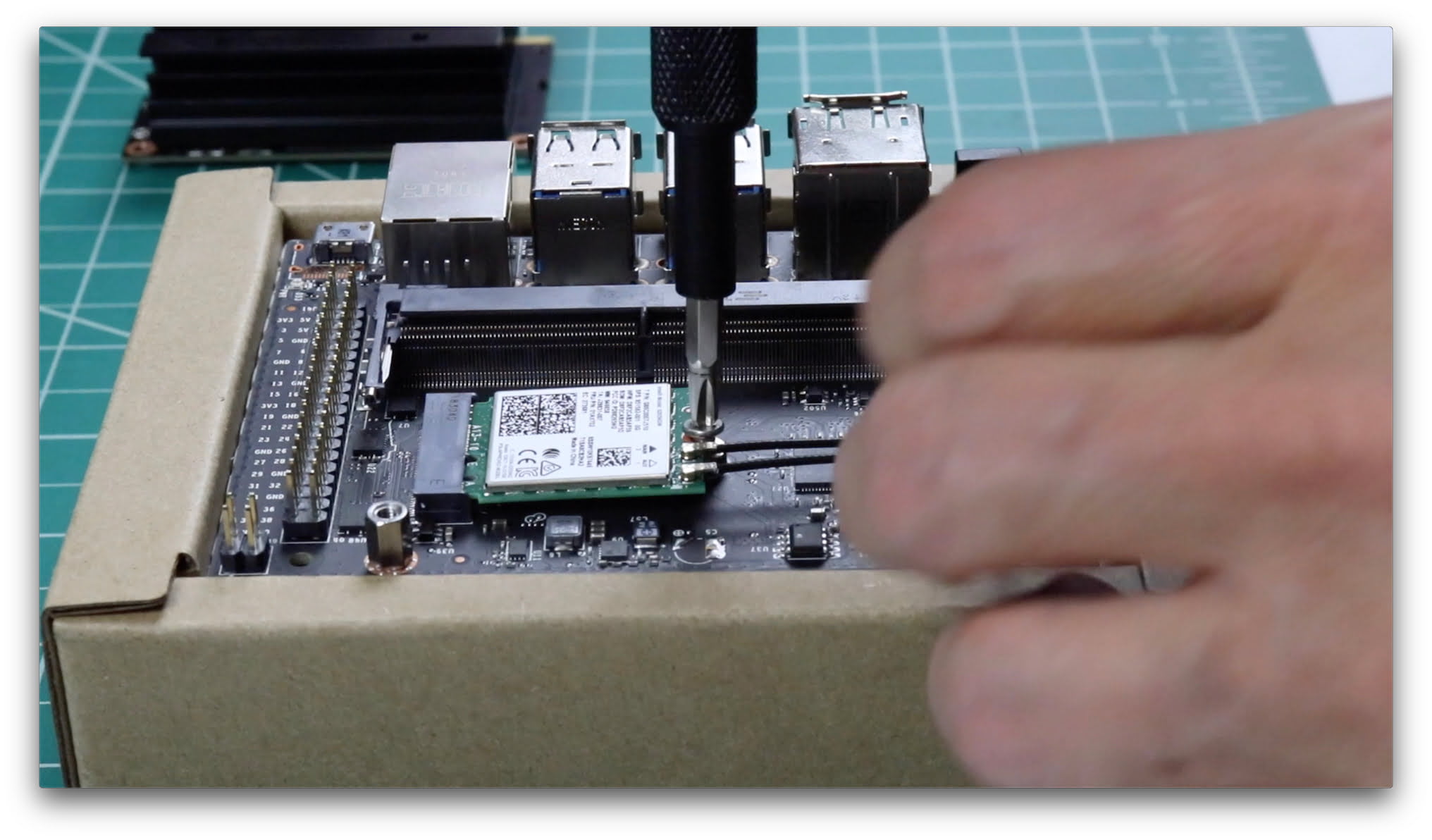

- In the video, the Jetson AGX Xavier was flashed with L4T 31.0.2 using JetPack 4.1. L4T 31.0.2 is an Ubuntu 18.04 derivative.

The post Robot Operating System (ROS) on NVIDIA Jetson AGX Xavier Developer Kit appeared first on JetsonHacks.